Preparing for machine learning interviews is hard. You can memorize 200 questions and answers and still encounter questions in the interview that you are not prepared for.

That’s why this article takes a different approach. Instead of going over dozens of individual questions, we’ll cover the 4 cat...

Questions about Feature Selection commonly appear in data science interviews. In this video, I’ll cover the usefulness of feature selection, how to use feature selection with over 10,000 features, how to calculate feature importance, and the pros and cons of various selection methods!

Questions about Ensemble Methods frequently appear in data science interviews. In this video, I’ll go over various examples of ensemble learning, the advantages of boosting and bagging, how to explain stacking, and more!
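Hard majority voting is one of the simplest ways to combine several classifiers, and it shows the core ensemble idea before you get to bagging, boosting, or stacking. The sketch below is only an illustration. `majority_vote` and the three prediction lists are hypothetical, and it assumes each model has already produced a class label for every sample.

```python
from collections import Counter

def majority_vote(predictions_per_model):
    """Combine class predictions from several models by hard voting.

    predictions_per_model: one list per model, each holding that model's
    predicted label for every sample (all lists have the same length).
    """
    n_samples = len(predictions_per_model[0])
    combined = []
    for i in range(n_samples):
        # Collect every model's vote for sample i and keep the most common label.
        votes = [preds[i] for preds in predictions_per_model]
        combined.append(Counter(votes).most_common(1)[0][0])
    return combined

model_a = [1, 0, 1, 1]
model_b = [1, 1, 0, 1]
model_c = [0, 0, 1, 1]
print(majority_vote([model_a, model_b, model_c]))  # [1, 0, 1, 1]
```

Using an odd number of models avoids ties for binary labels. When ties do occur, `Counter.most_common` returns the label that was counted first.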

Handling categorical data in machine learning projects is a very common topic in data science interviews. In this video, I’ll cover the difference between treating a variable as a dummy variable vs. a non-dummy variable, how you can deal with categorical features when the number of levels is very la...
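To make the dummy vs. non-dummy distinction concrete, here is a minimal pure-Python sketch. Both helpers (`integer_encode`, `one_hot_encode`) are hypothetical illustrations rather than a library API. In pandas, `get_dummies` with `drop_first=True` does the equivalent dummy encoding.

```python
def integer_encode(values):
    """Non-dummy encoding: map each category to a single integer code.

    Codes follow the sorted order of the categories, which imposes an
    arbitrary ordering that a linear model may treat as meaningful.
    """
    categories = sorted(set(values))
    index = {c: i for i, c in enumerate(categories)}
    return [index[v] for v in values], categories

def one_hot_encode(values, drop_first=False):
    """Dummy encoding: one 0/1 column per category.

    With drop_first=True the first category becomes the reference level,
    avoiding perfect collinearity (the "dummy variable trap") when the
    model also has an intercept.
    """
    categories = sorted(set(values))
    kept = categories[1:] if drop_first else categories
    rows = [[1 if v == c else 0 for c in kept] for v in values]
    return rows, kept

colors = ["red", "green", "blue", "green"]
codes, cats = integer_encode(colors)
print(cats, codes)      # ['blue', 'green', 'red'] [2, 1, 0, 1]
dummies, cols = one_hot_encode(colors, drop_first=True)
print(cols, dummies)    # ['green', 'red'] [[0, 1], [1, 0], [0, 0], [1, 0]]
```

Integer codes can work for tree-based models or genuinely ordinal categories, but for linear models the dummy encoding avoids implying an order or spacing between categories that does not exist.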

K-Means is one of the most popular machine learning algorithms you’ll encounter in data science interviews. In this video, I’ll explain what k-means clustering is, how to select the “k” in k-means, show you how to implement k-means from scratch, and go over the main pros and cons of this method.
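Implementing k-means from scratch usually means Lloyd's algorithm: assign every point to its nearest centroid, move each centroid to the mean of its assigned points, and repeat until nothing changes. The sketch below assumes points are tuples of floats and uses plain random initialization (k-means++ is a common improvement). The names `kmeans`, `assign`, and `inertia` are hypothetical. The `inertia` helper supports the elbow method, one common heuristic for choosing k.

```python
import random

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def assign(points, centroids):
    """Assignment step: index of the nearest centroid for each point."""
    return [min(range(len(centroids)), key=lambda j: squared_distance(p, centroids[j]))
            for p in points]

def kmeans(points, k, n_iters=100, seed=0):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    rng = random.Random(seed)
    # Simple random initialization: k distinct data points as starting centroids.
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(n_iters):
        labels = assign(points, centroids)
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid
        if new_centroids == centroids:  # converged: no centroid moved
            break
        centroids = new_centroids
    # Final assignment so the labels match the returned centroids.
    return centroids, assign(points, centroids)

def inertia(points, centroids, labels):
    """Within-cluster sum of squared distances, plotted against k for the elbow method."""
    return sum(squared_distance(p, centroids[lab]) for p, lab in zip(points, labels))

points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
for k in range(1, 5):
    centroids, labels = kmeans(points, k)
    print(k, round(inertia(points, centroids, labels), 2))
```

Inertia keeps decreasing as k grows, so the elbow method looks for the k where the improvement flattens out rather than for the minimum.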

Imbalanced data is one of the most common machine learning problems you’ll come across in data science interviews. In this video, I cover what an imbalanced dataset is, what disadvantages it presents, and how to deal with imbalanced data when only 1% of the data belongs to the minority class.
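Two standard remedies for a 1% minority class are reweighting the loss and resampling the training set. The sketch below shows both in plain Python under stated assumptions: `balanced_class_weights` uses the n_samples / (n_classes * class_count) formula that scikit-learn applies for `class_weight="balanced"`, and `random_oversample` is a hypothetical helper that duplicates minority rows with replacement. The features are placeholders.

```python
import random
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * c) for cls, c in counts.items()}

def random_oversample(rows, labels, seed=0):
    """Duplicate rows of smaller classes (with replacement) until all classes match the largest."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for cls, c in counts.items():
        pool = [r for r, y in zip(rows, labels) if y == cls]
        extra = [rng.choice(pool) for _ in range(target - c)]
        out_rows.extend(extra)
        out_labels.extend([cls] * len(extra))
    return out_rows, out_labels

labels = [0] * 990 + [1] * 10             # 1% minority class
rows = [[float(i)] for i in range(1000)]  # placeholder features
print(balanced_class_weights(labels))     # class 1 gets weight 50.0, class 0 about 0.505
new_rows, new_labels = random_oversample(rows, labels)
print(Counter(new_labels))                # 990 rows of each class
```

Resample only the training split, after the train/test split. Otherwise duplicated minority rows can end up in the evaluation data and inflate the scores.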

Regularization is a machine learning technique that adds a regularization term to a model’s loss function to improve how well the model generalizes. In this video, I explain L1 and L2 regularization, the main differences between the two methods, and leave you with helpful pr...
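With a penalty strength λ (`lam` below, an assumed name), L1 adds λ·Σ|w| to the loss and L2 adds λ·Σw². The sketch below computes both penalized losses for a linear model with mean squared error. It then uses a simplified one-weight problem, minimizing 0.5·(w - z)² plus the penalty, to show the key difference: L1 can push a weight to exactly zero, while L2 only shrinks it. All function names are illustrative, not from any library.

```python
def mse(weights, X, y):
    """Mean squared error of a linear model without an intercept."""
    preds = [sum(w * x for w, x in zip(weights, row)) for row in X]
    return sum((p - t) ** 2 for p, t in zip(preds, y)) / len(y)

def regularized_loss(weights, X, y, lam, kind="l2"):
    """MSE plus an L1 (lam * sum|w|) or L2 (lam * sum w^2) penalty."""
    if kind == "l1":
        penalty = lam * sum(abs(w) for w in weights)
    else:
        penalty = lam * sum(w * w for w in weights)
    return mse(weights, X, y) + penalty

# One-weight problem: minimize 0.5 * (w - z)**2 + penalty(w).
def l1_solution(z, lam):
    """Soft-thresholding: any weight with |z| <= lam becomes exactly zero."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

def l2_solution(z, lam):
    """Setting the derivative (w - z) + 2 * lam * w to zero gives w = z / (1 + 2 * lam)."""
    return z / (1 + 2 * lam)

zs = [3.0, 0.5, -0.2]
print([l1_solution(z, 1.0) for z in zs])            # [2.0, 0.0, 0.0]
print([round(l2_solution(z, 1.0), 3) for z in zs])  # [1.0, 0.167, -0.067]
```

This is why L1 is often described as performing feature selection, while L2 spreads shrinkage across all weights.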

Random Forest is one of the most practical algorithms for fast, simple, and flexible predictive modeling. In this video, I dive into how Random Forest works, how you can use it to reduce variance, what makes it “random,” and the most common pros and cons associated with using this method.
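The "random" in Random Forest comes from two places: each tree is trained on a bootstrap sample of the rows, and each split considers only a random subset of the features. Averaging many such decorrelated trees is what reduces variance. The sketch below shows only those three ingredients. Tree fitting is omitted, the helper names are hypothetical, and the sqrt(n_features) default is an assumption (a common choice for classification). It averages outputs as a regression forest would, whereas classification forests vote instead.

```python
import math
import random

def bootstrap_indices(n_samples, rng):
    """Draw n_samples row indices with replacement (one tree's training set)."""
    return [rng.randrange(n_samples) for _ in range(n_samples)]

def split_candidates(n_features, rng, max_features=None):
    """Choose the random subset of feature indices that one split may consider."""
    m = max_features if max_features is not None else max(1, int(math.sqrt(n_features)))
    return sorted(rng.sample(range(n_features), m))

def average_predictions(per_tree_predictions):
    """Average the trees' outputs sample by sample; this averaging cuts variance."""
    n_trees = len(per_tree_predictions)
    return [sum(col) / n_trees for col in zip(*per_tree_predictions)]

rng = random.Random(42)
rows = bootstrap_indices(10, rng)
print(len(rows), len(set(rows)))  # 10 draws, usually with some rows repeated
print(split_candidates(16, rng))  # 4 of the 16 feature indices
print(average_predictions([[1.0, 2.0], [3.0, 4.0]]))  # [2.0, 3.0]
```

For large datasets a bootstrap sample contains roughly 63% of the distinct rows. The rows left out are what out-of-bag error estimates are computed on.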

Questions about Gradient Boosting frequently appear in data science interviews. In this video, I cover what the Gradient Boosting method and XGBoost are, teach you how I would describe the architecture of gradient boosting, and go over some common pros and cons associated with gradient-boosted trees...
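One way to describe the architecture of gradient boosting is as an additive model built stage by stage. You start from a constant prediction, then repeatedly fit a small tree to the negative gradient of the loss and add a scaled-down copy of it. With squared-error loss the negative gradient is just the residual, which keeps the sketch short. Everything here is a simplifying assumption: one input feature, depth-1 trees (stumps), and the hypothetical names `fit_stump` and `gradient_boost`. Libraries such as XGBoost add refinements like a regularized objective and second-order gradient information.

```python
def fit_stump(x, residuals):
    """Least-squares depth-1 tree on a single feature: one threshold, two leaf values."""
    best = None
    for threshold in sorted(set(x)):
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        if not right:
            continue
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:  # all x identical: fall back to a constant
        mean = sum(residuals) / len(residuals)
        return lambda xi: mean
    _, threshold, left_mean, right_mean = best
    return lambda xi: left_mean if xi <= threshold else right_mean

def gradient_boost(x, y, n_rounds=50, learning_rate=0.1):
    base = sum(y) / len(y)  # stage 0: constant prediction
    stumps = []
    preds = [base] * len(y)
    for _ in range(n_rounds):
        # For squared-error loss, the negative gradient is the residual.
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        # Shrink each new tree's contribution by the learning rate.
        preds = [pi + learning_rate * stump(xi) for pi, xi in zip(preds, x)]

    def predict(xi):
        return base + learning_rate * sum(s(xi) for s in stumps)

    return predict

x = [1, 2, 3, 4, 5, 6]
y = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
model = gradient_boost(x, y)
print(round(model(2), 2), round(model(5), 2))  # 1.01 4.99
```

The small learning rate means each round removes only 10% of the remaining residual here, which is the usual trade-off: more rounds in exchange for a model that overfits less aggressively.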

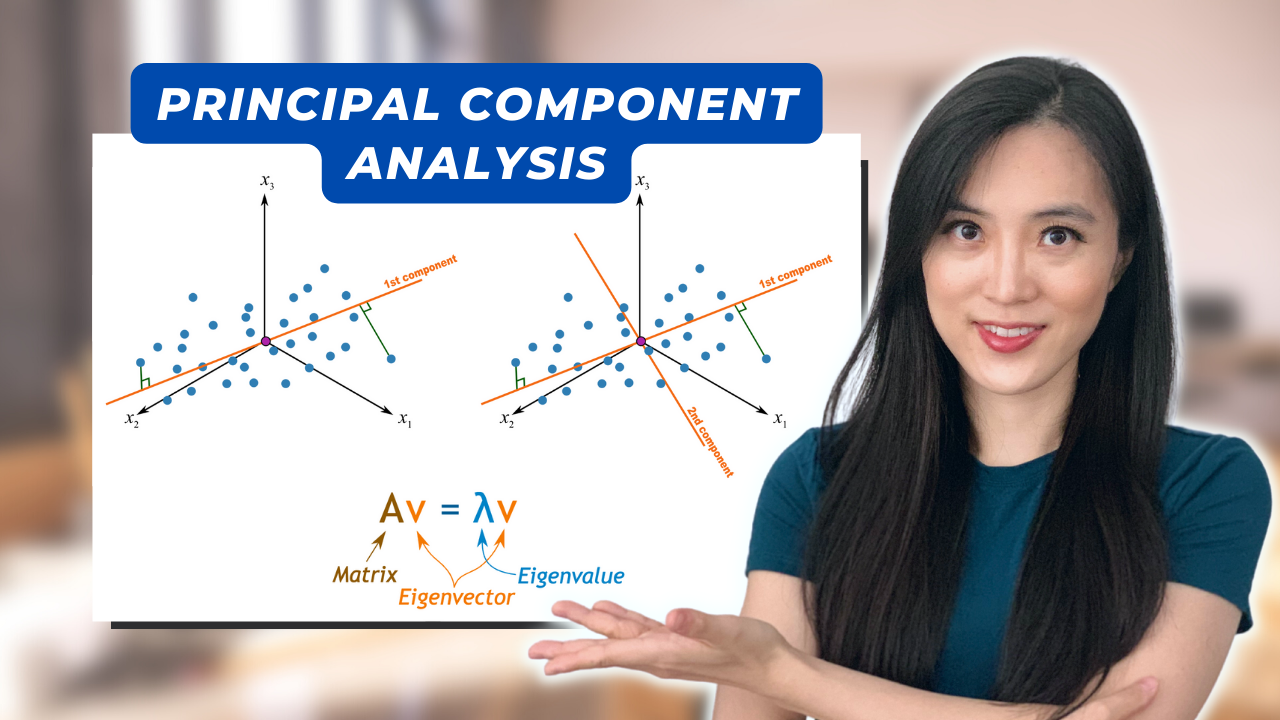

Questions about Principal Component Analysis commonly appear in data science interviews. In this video, I’ll explain what principal component analysis is, how it works, the problems you would use PCA for, and the pros and cons associated with PCA.
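A compact way to explain how PCA works: center the data, compute its covariance matrix, and find the directions (eigenvectors) along which the variance is largest. The sketch below finds only the first principal component, using power iteration instead of a full eigendecomposition so that it stays dependency-free. The function names and the small example dataset are hypothetical, and in practice a library routine based on SVD is the usual choice.

```python
import math
import random

def covariance_matrix(data):
    """Center each column, then compute the sample covariance matrix."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    return cov, centered

def first_component(cov, n_iters=200, seed=0):
    """Power iteration: repeatedly multiply a vector by cov and renormalize.

    Converges to the top eigenvector unless the random start is orthogonal
    to it, which is vanishingly unlikely.
    """
    d = len(cov)
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in range(d)]
    for _ in range(n_iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T cov v: the variance captured along v.
    eigenvalue = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    return v, eigenvalue

data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]
cov, centered = covariance_matrix(data)
v, eigenvalue = first_component(cov)
explained = eigenvalue / sum(cov[i][i] for i in range(len(cov)))  # share of total variance
scores = [sum(c * w for c, w in zip(row, v)) for row in centered]  # 1-D projection
print([round(x, 3) for x in v], round(explained, 4))
```

The sign of the component is arbitrary, so only its direction is meaningful. Here nearly all the variance lies along the line y ≈ 2x, which is the kind of case where reducing two features to one component loses little information.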